AI Governance in 2025: A Practical Guide for Business Leaders

How to Navigate AI Regulation, Bias Prevention, and Governance Frameworks Without Getting Left Behind

If you're reading this, you're likely feeling the pressure around AI governance that's building across industries. It's 2025, and your team is almost certainly using AI tools for your business—whether that's ChatGPT for marketing ideas or automated hiring systems. What's become clear is that strong AI governance isn't optional anymore; it's essential for protecting your business and maintaining competitive advantage.

The AI governance landscape has shifted dramatically, and businesses that haven't adapted are facing real challenges. Here's what the data shows: A McKinsey report on the State of AI found that 78% of companies are utilizing AI, but only 21% have effectively integrated it into their workflows. Meanwhile, a recent Deloitte survey revealed that only a quarter of corporate leaders felt truly prepared to handle the governance and risk challenges associated with adopting AI.

The stakes are high. Jurisdictions like the European Union and Brazil are implementing comprehensive AI regulations with serious penalties. Here in the U.S., while federal oversight remains fragmented, state-level enforcement is accelerating. As of July 2025, all 50 U.S. states, along with several key territories, have introduced AI-related legislation.

This guide breaks down AI compliance requirements, bias prevention, and risk management in practical terms. Whether you're in HR using AI for recruiting, on a marketing team leveraging content generation, or an operations manager implementing AI workflows, you'll find actionable strategies that work in real business environments.

What you'll learn:

Why AI governance is now a business imperative

How global AI regulations affect your operations and risk exposure

A practical AI governance framework you can implement

How to turn AI governance into competitive advantage

Why AI Governance Matters for Your Business Right Now

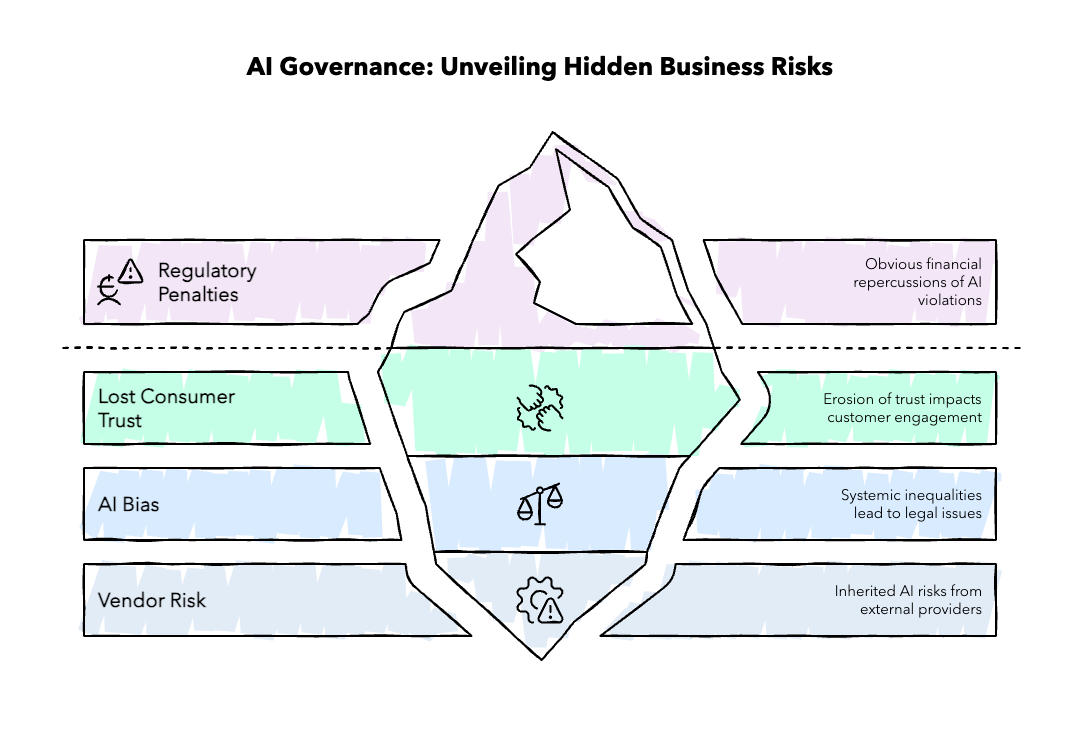

The risks are real, but they're also manageable when you understand what you're dealing with. Let's break down why this deserves your immediate attention.

The Financial Impact

The regulatory penalties are substantial and create cascading effects throughout organizations. For the most serious violations, the EU AI Act imposes fines of up to €35 million or 7% of global annual turnover, whichever is higher. That ceiling exceeds even GDPR's maximum of €20 million or 4% of turnover, making these among the most expensive regulatory violations in Europe.
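
To make the penalty ceiling concrete, here is a minimal Python sketch of the arithmetic. It assumes the higher of the two amounts applies, which is how the ceiling for the most serious violations is generally described; the function name and the sample turnover figures are hypothetical, and actual fines depend on the violation and the regulator.

```python
# Hypothetical helper: estimates the penalty ceiling quoted above.
# Assumption: the higher of the two amounts applies.

FIXED_CAP_EUR = 35_000_000   # EUR 35 million
TURNOVER_PERCENT = 7         # 7% of global annual turnover


def max_fine_exposure(global_annual_turnover_eur):
    """Return the larger of the fixed cap and the turnover-based cap, in EUR."""
    if global_annual_turnover_eur < 0:
        raise ValueError("turnover must be non-negative")
    turnover_cap = global_annual_turnover_eur * TURNOVER_PERCENT / 100
    return max(FIXED_CAP_EUR, turnover_cap)


if __name__ == "__main__":
    # Sample turnover figures are made up for illustration.
    for turnover in (100_000_000, 500_000_000, 2_000_000_000):
        ceiling = max_fine_exposure(turnover)
        print(f"Turnover EUR {turnover:,}: ceiling EUR {ceiling:,.0f}")
```

Under this assumption, the percentage only becomes the binding figure once global turnover passes €500 million; below that, the €35 million amount is the ceiling.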

But financial risk extends beyond regulatory penalties. Companies face class-action lawsuits from affected individuals, emergency remediation costs when systems must be rebuilt, forensic audit expenses to prove compliance, and opportunity costs when key personnel spend months dealing with regulators instead of growing the business. AI violations don't just cost money—they can derail business momentum for years.

The good news: these risks are preventable with proper governance frameworks.

The Cost of Lost Trust

Consumer trust in AI is fragile. Only 23% of American consumers trust businesses to handle AI responsibly, according to a recent survey by Gallup and Bentley University. This trust deficit reflects legitimate concerns about how AI systems make decisions affecting people's access to opportunities, services, and fair treatment.

When trust erodes, business metrics suffer. Customer acquisition costs increase when people avoid AI-powered services. Employee retention drops when workers don't trust the systems they're required to use. Partnership opportunities disappear when other businesses question your AI practices.

The question becomes: are you building technology that people want to engage with, or technology they want to avoid?

The Bias Challenge

AI bias isn't just an ethical concern; it's a business liability that can create serious legal and reputational problems. Biased AI systems can skew decisions in hiring, lending, healthcare, and law enforcement, perpetuating systemic inequalities and creating legal exposure under anti-discrimination laws.

These systems make decisions that affect people's lives, livelihoods, and opportunities. When an AI hiring tool systematically screens out qualified candidates based on hidden biases, or when healthcare AI fails to recognize symptoms in specific populations, the human impact extends far beyond any regulatory penalty.

The challenge is that bias often emerges subtly through training data, model design, or deployment contexts that seem neutral but produce discriminatory outcomes. Without systematic bias detection and mitigation, companies face regulatory penalties while inadvertently limiting opportunities for talented individuals.

Vendor Risk

Most businesses don't build AI—they buy it. Whether it's your CRM's new AI features, your hiring platform's resume screening, or your customer service chatbot, you're inheriting AI risks from your vendors. Without proper governance, you lack visibility into:

What data your AI tools are trained on and whether it contains biases

How they make decisions that affect your customers

Whether they comply with regulations in your markets

What happens when they fail or produce discriminatory results

The liability doesn't disappear because you're using a vendor's AI system. You're still responsible for outcomes and compliance in your jurisdiction.

2025 Global AI Regulations: What Businesses Need to Know

The global regulatory landscape breaks down into three distinct approaches, each with different implications for your business operations, plus a layer of voluntary international coordination.

Comprehensive Risk-Based Frameworks

Europe: The EU AI Act

Europe established the global standard with the EU AI Act. If you have European customers, use European AI services, or plan to expand to Europe, these rules apply to you.

Key requirements: Some AI uses are prohibited outright (such as social scoring), others require extensive documentation and audits before deployment, and the most serious violations can cost up to €35 million (roughly USD 38 million) or 7% of global annual turnover. Most provisions take effect in August 2026, but the bans on prohibited practices are already in force.

Brazil: Following the EU Model

Brazil is implementing a similar framework with fines up to R$50 million (approximately USD 10 million) per violation. The Senate approved the framework, and it's proceeding through the legislative process.

Business impact: Legal certainty but significant compliance costs. For global operations, these become baseline requirements because they're the most comprehensive.

Innovation-First Approaches

United States: Fragmented State-Level Action

The U.S. has no federal AI law. Instead, all 50 states plus several territories have introduced or are considering AI legislation. Congress has debated but not enacted federal law that would preempt state regulation. Sector-specific regulators are adapting existing rules to cover AI applications.

United Kingdom: Principles-Based Regulation

The UK lets existing regulators handle AI within their current authority rather than creating new AI-specific laws. The government has delayed comprehensive AI legislation until summer 2025 while it focuses on promoting AI innovation.

Japan: Soft Regulation

Japan passed an AI promotion law that provides guidance rather than binding requirements. Companies aren't required to comply, but the government can publicly identify non-compliant businesses.

Business impact: Lower immediate compliance costs but higher regulatory uncertainty. Good for rapid deployment, but requires agility as rules evolve.

Sector-Specific Approaches

China: Content Control Focus

China doesn't have comprehensive AI regulation, but it tightly regulates AI-generated content. Starting September 1, 2025, all AI-generated content must be clearly labeled, and platforms are responsible for detecting and marking it.

Business impact: If you serve Chinese markets or create content, immediate compliance systems are required. Otherwise, you have more operational flexibility.

International Coordination

OECD Voluntary Framework

Major tech companies (Amazon, Google, Microsoft, OpenAI) participate in a voluntary reporting framework launched in February 2025, promoting transparency through regular disclosures.

G7 Code of Conduct

The G7 established common principles through the Hiroshima AI Process, focusing on advanced AI systems and safety measures.

Strategic Planning Implications

Small businesses: Start with voluntary frameworks like NIST guidelines. Focus on markets where you operate.

Global operations: Plan for European rules as your baseline—they're the most comprehensive and expensive to violate.

Sector-specific businesses: Monitor your industry regulators who are adapting existing rules to cover AI.

The key insight: Regulation is happening, but inconsistently. Companies that establish governance practices now can adapt more easily as regulatory approaches converge or diverge.

A Practical AI Governance Framework

Based on the NIST AI Risk Management Framework and OECD guidelines, here's a practical approach that scales for companies of all sizes:

Step 1: Establish Governance Foundation

Create an AI Governance Committee

Include representatives from legal, compliance, IT, and key business units. Start with monthly meetings, transitioning to quarterly once processes are established. Assign clear accountability with defined approval processes.

Develop AI Usage Policies

Define approved AI tools and authorized users

Establish approval processes for new AI implementations

Create escalation procedures for AI incidents

Documentation Requirements

Maintain inventory of all AI systems and tools

Track data sources, decision-making processes, and business impact

Establish version control for AI models and policies
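
The documentation requirements above can start as something very simple. The sketch below shows one possible shape for an inventory record and a check for overdue reviews. Every field name, the 90-day review window, and the sample entries are assumptions for illustration, not a prescribed schema.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class AISystemRecord:
    """One row in a hypothetical AI inventory; field names are illustrative."""
    name: str
    business_owner: str
    vendor: Optional[str]        # None for systems built in-house
    purpose: str
    data_sources: List[str]
    business_impact: str
    model_version: str
    policy_version: str
    last_reviewed: date


def overdue_for_review(inventory, today, max_age_days=90):
    """Return names of systems whose last review is older than max_age_days."""
    return [
        record.name
        for record in inventory
        if (today - record.last_reviewed).days > max_age_days
    ]


if __name__ == "__main__":
    # Sample entries are invented for illustration.
    inventory = [
        AISystemRecord("resume-screener", "HR", "ExampleVendor",
                       "Rank job applicants", ["applicant resumes"],
                       "Affects who is interviewed", "2.3.1", "2025-01",
                       date(2025, 1, 15)),
        AISystemRecord("marketing-copy-assistant", "Marketing", "ExampleVendor",
                       "Draft campaign copy", ["brand guidelines"],
                       "Affects published content", "1.0.0", "2025-01",
                       date(2025, 6, 1)),
    ]
    print(overdue_for_review(inventory, today=date(2025, 7, 1)))
```

A spreadsheet with the same columns works just as well; the point is that every system has an owner, a version, and a review date that can be checked.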

Step 2: Risk Assessment and Compliance Mapping

AI Landscape Mapping

Identify all AI systems currently in use (including vendor tools). Categorize each by risk level: minimal, limited, high risk, or prohibited, which mirror the EU AI Act's tiers. Assess impact on individuals and communities.

System Evaluation Framework

People & Planet: Who is affected? What are societal impacts?

Economic Context: What business processes does it influence?

Data & Input: What data is used? How is quality ensured?

AI Model: How does it make decisions? Can you explain outputs?

Task & Output: What actions result from AI decisions?

Critical Assessment Questions

Does this system make decisions about individuals (hiring, lending, healthcare, housing)?

Could biased outputs perpetuate inequality or limit opportunities?

Is the system transparent and explainable to affected parties?

Do we have meaningful human oversight to prevent harm?

Can we detect and correct errors before they impact people?

Does this system expand or limit human potential and opportunity?
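
One way to make these questions repeatable is to record the answers and run a first-pass triage over them. The sketch below is a hypothetical internal screening rule that maps yes/no answers onto the four risk levels named earlier. The mapping, the field names, and the `uses_prohibited_practice` flag are assumptions, and the result is not a legal classification.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class RiskTier(Enum):
    MINIMAL = "minimal"
    LIMITED = "limited"
    HIGH = "high risk"
    PROHIBITED = "prohibited"


@dataclass
class Assessment:
    """Yes/no answers to the assessment questions; field names are illustrative."""
    decides_about_individuals: bool
    could_perpetuate_inequality: bool
    explainable_to_affected_parties: bool
    has_meaningful_human_oversight: bool
    can_correct_errors_before_impact: bool
    uses_prohibited_practice: bool = False  # assumption: screened separately by legal


def triage(a: Assessment) -> RiskTier:
    """First-pass internal tiering. Not a legal classification."""
    if a.uses_prohibited_practice:
        return RiskTier.PROHIBITED
    if a.decides_about_individuals:
        return RiskTier.HIGH
    if a.could_perpetuate_inequality:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


def open_gaps(a: Assessment) -> List[str]:
    """List the safeguards that the answers show to be missing."""
    gaps = []
    if not a.explainable_to_affected_parties:
        gaps.append("explainability")
    if not a.has_meaningful_human_oversight:
        gaps.append("human oversight")
    if not a.can_correct_errors_before_impact:
        gaps.append("error detection and correction")
    return gaps


if __name__ == "__main__":
    resume_screener = Assessment(True, True, False, True, False)
    print(triage(resume_screener).value, open_gaps(resume_screener))
```

Anything that lands in the high-risk tier with open gaps is a natural candidate for the governance committee's agenda.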

Step 3: Implement Monitoring and Risk Controls

Bias Detection and Mitigation

Conduct quarterly bias audits for systems affecting people's opportunities (hiring, lending, healthcare, housing)

Test AI systems across demographic groups before deployment, with focus on historically marginalized communities

Implement algorithmic impact assessments using frameworks like ISO/IEC 42005:2025

Establish bias incident response procedures prioritizing rapid correction

Train users to recognize and report potential bias

Create feedback mechanisms for people affected by AI decisions
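
As an illustration of what testing across demographic groups can look like, the sketch below compares selection rates between groups and flags any group whose rate falls below 80% of the highest. That 80% threshold is the "four-fifths" rule of thumb from U.S. employment selection guidance. It is an assumption added here rather than something this guide prescribes, and it is a screening heuristic, not a legal test. The group labels and counts are invented.

```python
from collections import defaultdict


def selection_rates(outcomes):
    """outcomes: iterable of (group, was_selected) pairs. Returns {group: rate}."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in outcomes:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratios(rates):
    """Divide each group's selection rate by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        # Nobody was selected in any group, so no disparity can be measured.
        return {group: 1.0 for group in rates}
    return {group: rate / best for group, rate in rates.items()}


def flag_groups(ratios, threshold=0.8):
    """Return groups whose impact ratio falls below the threshold."""
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


if __name__ == "__main__":
    # Invented audit data: 50 of 100 selected in group A, 30 of 100 in group B.
    outcomes = (
        [("A", True)] * 50 + [("A", False)] * 50
        + [("B", True)] * 30 + [("B", False)] * 70
    )
    rates = selection_rates(outcomes)
    print(rates, flag_groups(impact_ratios(rates)))
```

A flagged group is a prompt for investigation rather than proof of discrimination. Small sample sizes in particular can produce misleading ratios.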

Continuous Monitoring

Deploy automated monitoring for accuracy, drift, and fairness metrics

Establish model retraining schedules and performance thresholds

Create alerts for performance degradation or anomalous outputs

Monitor for disparate impact across protected classes
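
The monitoring items above can be sketched as two small pieces: a drift score and a threshold check. The example uses the Population Stability Index (PSI), a common drift measure chosen here as an assumption, since this guide does not name a specific metric. The metric names and limits are hypothetical and should be tuned per system.

```python
import math


def psi(expected_counts, actual_counts, eps=1e-6):
    """Population Stability Index between two binned distributions."""
    if len(expected_counts) != len(actual_counts):
        raise ValueError("both distributions need the same number of bins")
    expected_total = sum(expected_counts)
    actual_total = sum(actual_counts)
    score = 0.0
    for expected, actual in zip(expected_counts, actual_counts):
        # Floor each share at eps so empty bins do not cause log(0).
        e_share = max(expected / expected_total, eps)
        a_share = max(actual / actual_total, eps)
        score += (a_share - e_share) * math.log(a_share / e_share)
    return score


def alerts(metrics, limits):
    """limits maps metric name to (kind, bound), where kind is 'min' or 'max'."""
    messages = []
    for name, (kind, bound) in limits.items():
        value = metrics.get(name)
        if value is None:
            messages.append(f"{name}: no data")
        elif kind == "min" and value < bound:
            messages.append(f"{name}: {value:.3f} below minimum {bound}")
        elif kind == "max" and value > bound:
            messages.append(f"{name}: {value:.3f} above maximum {bound}")
    return messages


if __name__ == "__main__":
    # Invented numbers: input distribution at training time vs. this month.
    drift = psi([50, 50], [80, 20])
    metrics = {"accuracy": 0.91, "input_drift_psi": drift, "impact_ratio": 0.75}
    limits = {
        "accuracy": ("min", 0.90),          # hypothetical performance threshold
        "input_drift_psi": ("max", 0.25),   # commonly cited rule of thumb
        "impact_ratio": ("min", 0.80),      # hypothetical fairness threshold
    }
    for message in alerts(metrics, limits):
        print(message)
```

In practice the same check would run on a schedule and feed whatever alerting channel the team already uses.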

Human Oversight Requirements

Require human review for high-stakes decisions affecting people's opportunities or access

Train users to recognize AI limitations and biases

Establish override procedures when AI systems fail

Ensure oversight includes diverse perspectives reflecting served communities
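
A human-review requirement is easier to enforce when it is written as an explicit routing rule. The following is a minimal sketch under stated assumptions: the domain list comes from the examples in this guide, while the confidence threshold, the class name, and the idea that the model reports a confidence score are all hypothetical.

```python
from dataclasses import dataclass

# Domains this guide names as affecting people's opportunities or access.
HIGH_STAKES_DOMAINS = {"hiring", "lending", "healthcare", "housing"}


@dataclass
class PendingDecision:
    domain: str
    model_confidence: float  # 0.0 to 1.0; assumed to be reported by the model


def route(decision, min_confidence=0.90):
    """Return 'human_review' or 'automated' for a pending decision."""
    if decision.domain in HIGH_STAKES_DOMAINS:
        return "human_review"  # always reviewed, regardless of confidence
    if decision.model_confidence < min_confidence:
        return "human_review"  # assumption: low confidence also triggers review
    return "automated"


if __name__ == "__main__":
    print(route(PendingDecision("hiring", 0.99)))
    print(route(PendingDecision("marketing", 0.95)))
    print(route(PendingDecision("marketing", 0.60)))
```

The design choice worth noting is that high-stakes domains bypass the confidence check entirely, so a confident model never skips review where people's opportunities are at stake.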

Data Governance

Implement data quality checks and bias detection

Create data retention and deletion procedures

Maintain privacy standards throughout AI development and deployment

Step 4: Vendor Risk Management

Vendor Assessment Questions

What can their AI do, and how were models trained?

Do they test for bias and have evidence of mitigation efforts?

How do they prevent systems from perpetuating existing inequalities?

Can we audit their bias testing and understand decision-making processes?

What's their response plan for system failures or discriminatory results?

Can they provide bias reports demonstrating fairness across groups?

Does their development team include diverse perspectives?

Contractual Protections

Include AI-specific liability allocation clauses

Require vendor compliance with applicable regulations

Establish audit rights and performance monitoring requirements

Define incident response and notification procedures

Step 5: Incident Response and Remediation

Response Plan Development

Develop AI-specific response playbooks covering bias, accuracy failures, and security breaches. Train response teams on AI system characteristics and remediation procedures. Establish communication protocols for stakeholder notification. Create procedures for system shutdown and correction when discrimination is detected.

Regular Testing and Updates

Conduct quarterly bias audits for high-risk systems

Update AI policies based on regulatory changes

Test incident response procedures annually

Review and update risk assessments as systems evolve

Step 6: Measure Success and ROI

Governance Metrics

Time-to-production for AI systems

Incident response time and resolution effectiveness

Compliance assessment scores and audit findings

Stakeholder trust and satisfaction measurements

ROI Measurement

Risk mitigation value (avoided fines, incidents, reputational damage)

Operational efficiency gains from governed AI deployment

Market access enabled by compliance certifications

Innovation acceleration through clear governance frameworks
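
For the ROI items above, a rough expected-loss calculation is often enough to start the conversation. The sketch below treats risk mitigation value as the drop in expected annual loss and adds efficiency gains. The formula is a simplification, and every probability and cost in the example is invented for illustration.

```python
def expected_loss(risks):
    """risks: iterable of (annual_probability, cost_if_it_happens) pairs."""
    return sum(probability * cost for probability, cost in risks)


def governance_roi(loss_before, loss_after, efficiency_gain, program_cost):
    """Return ROI as a ratio: (benefit - cost) / cost."""
    if program_cost <= 0:
        raise ValueError("program_cost must be positive")
    benefit = (loss_before - loss_after) + efficiency_gain
    return (benefit - program_cost) / program_cost


if __name__ == "__main__":
    # Invented figures: (chance per year, cost) for a fine and a major incident.
    before = expected_loss([(0.10, 1_000_000), (0.05, 2_000_000)])
    after = expected_loss([(0.02, 1_000_000), (0.01, 2_000_000)])
    roi = governance_roi(before, after, efficiency_gain=50_000, program_cost=125_000)
    print(f"Expected loss before: {before:,.0f}, after: {after:,.0f}, ROI: {roi:.0%}")
```

The estimates will be rough, but writing them down makes the assumptions visible and lets the committee revisit them as real incident data accumulates.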

Key Takeaways: Your AI Governance Action Plan

The Strategic Reality

AI governance isn't about slowing innovation—it's about building systems that work effectively while protecting your business. The companies succeeding in 2025 aren't avoiding AI governance; they're leveraging it for competitive advantage. With only 14% of organizations having mature, enterprise-wide AI governance frameworks, early adopters gain significant advantages while competitors remain unprepared.

Immediate Action Steps

Assessment: Use our ATLAS™ AI readiness assessment to evaluate your current position.

Foundation: Establish an AI governance committee and basic policies within 30 days.

Monitoring: Implement bias tracking for the highest-risk AI systems.

Auditing: Test existing AI systems for discriminatory outcomes across demographic groups.

Inventory: Document all AI tools and systems currently in use.

Planning: Develop scalable governance processes that adapt to regulatory changes.

Essential Resources

Ready to develop AI systems that expand rather than limit human potential? Contact Fox + Spindle to build a comprehensive AI governance framework that protects your business while enabling innovation.